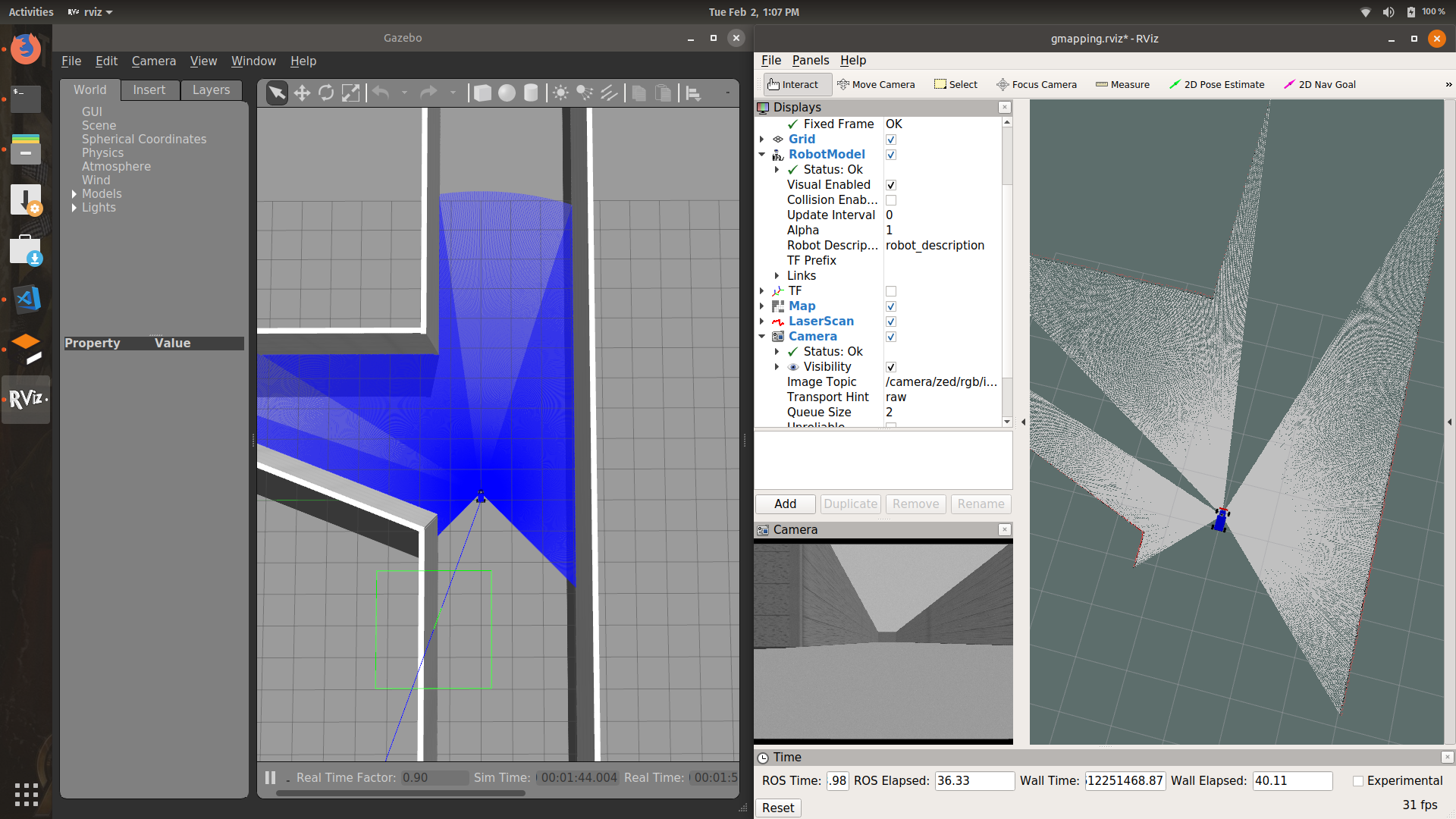

For a recent project, I had to map an environment using the MIT-Racecar robot in Gazebo. This is a log of my progress implementing Simultaneous Localisation and Mapping (SLAM) on the MIT-Racecar bot. I encountered some interesting problems, and learnt a few things while solving them. In this short post, I will summarise the steps that I used to solve the problems and get gmapping to run on the racecar_gazebo simulator.

After cloning the racecar [1] and racecar_gazebo [2] packages to the /src directory and building them using catkin_make, I launched the simulation using:

$ roslaunch racecar-env racecar_tunnel.launch

Of course, things never work on the first try. I encountered two errors, which were fixed by installing the following dependencies:

Now running the same environment launched Gazebo and spawned the racecar in the tunnel map.

To map the environment, I used gmapping. After setting up the launch file gmapping.launch and running it, rviz showed the warning "No map received", and the terminal showed:

[ WARN] [1612766930.932705394, 1106.617000000]: MessageFilter [target=odom ]: Dropped 100.00% of messages so far. Please turn the [ros.gmapping.message_filter] rosconsole logger to DEBUG for more information.

Clearly, there was some error with the odometry information. A quick

$ rosrun tf view_frames

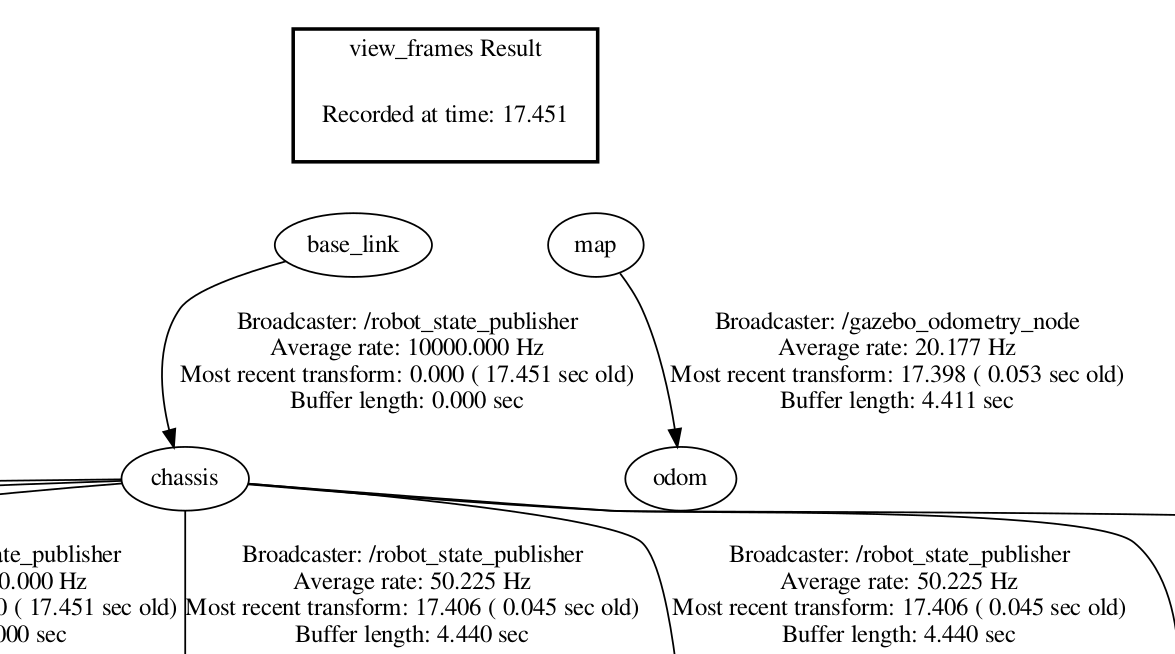

revealed that there was no odom --> base_link transform, which gmapping needs in order to run properly. Refer to REP 105 [3] for the convention.

There are 2 ways of fixing this error:

- Using the static transform publisher [4], a transformation can be created from odom --> base_link. This will work in the case that the robot is fixed with respect to the map. But as I wanted the racecar to explore and map the environment, this approach isn’t viable.
- Dynamically publish the relationship between the odom and base_link frames. Generally, this is done using inputs from on-board sensors like encoders and lidar to estimate the robot’s location w.r.t. the map.

The second option is the ideal approach. But as the robot is running in simulation, a clever shortcut can be used: instead of using sensor readings, one can use the robot pose that the simulator (Gazebo) already knows as the odometry. A closer look at the mit-racecar code revealed that this is exactly what is being done in the gazebo_odometry.py script located in the racecar_gazebo package.

Executing

$ rosnode info /gazebo_odometry_node

revealed that this node subscribes to the /gazebo/link_states topic, which provides the robot pose information. It also publishes to the /tf topic, which is where the map-->odom transform is being broadcast. This is in line with what we saw in the tf tree above.

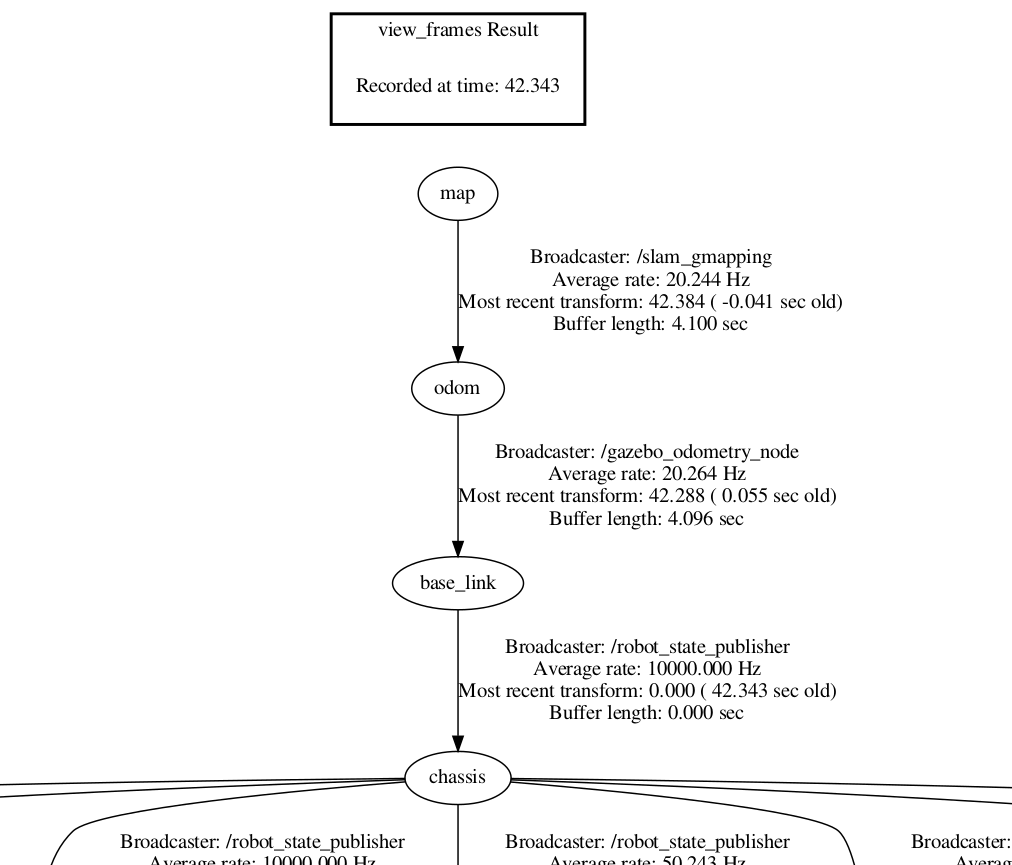
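To make the idea concrete, here is a minimal sketch of how a pose can be picked out of a link_states message. A gazebo_msgs/LinkStates message carries parallel name and pose lists (plus a twist list, omitted here), so the lookup is just an index search. The namedtuples are stand-ins so that the snippet runs without ROS, and the link name racecar::base_link and the helper pose_of_link are my assumptions for illustration, not necessarily what gazebo_odometry.py uses.

```python
from collections import namedtuple

# Stand-ins for the ROS message types so the sketch runs without ROS.
# In gazebo_msgs/LinkStates, `name` and `pose` are parallel lists.
Point = namedtuple("Point", "x y z")
Quaternion = namedtuple("Quaternion", "x y z w")
Pose = namedtuple("Pose", "position orientation")
LinkStates = namedtuple("LinkStates", "name pose")


def pose_of_link(msg, link_name):
    """Return the pose of `link_name` from a LinkStates-like message, or None."""
    try:
        index = msg.name.index(link_name)
    except ValueError:
        return None  # the link is not part of this message
    return msg.pose[index]


# Hypothetical message containing a ground plane and the robot's base link.
msg = LinkStates(
    name=["ground_plane::link", "racecar::base_link"],
    pose=[
        Pose(Point(0.0, 0.0, 0.0), Quaternion(0.0, 0.0, 0.0, 1.0)),
        Pose(Point(1.5, -0.2, 0.05), Quaternion(0.0, 0.0, 0.0, 1.0)),
    ],
)

robot_pose = pose_of_link(msg, "racecar::base_link")
print(robot_pose.position)
```

In a real node, the same lookup would presumably live inside the subscriber callback for /gazebo/link_states, and the resulting pose would be what gets broadcast on /tf.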

So I made a slight modification to gazebo_odometry.py to configure the tf tree for gmapping. After looking at the ROS wiki documentation for the slam_gmapping package [5], I found that gmapping needs an odom-->base_link-->laser transform chain to run correctly. The gazebo_odometry.py node was written (from what I understood) for navigation purposes, which is why it publishes map-->odom instead of odom-->base_link. So I simply changed the parent frame to odom and the child frame to base_link in the script, and voila! We now have working SLAM!
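The change boils down to two strings. Here is a rough sketch of it, using a plain dict as a stand-in for a geometry_msgs/TransformStamped message (whose header.frame_id is the parent frame and whose child_frame_id is the child frame). The helper transform_fields and the pose values are hypothetical and only there for illustration; the actual script may structure this differently.

```python
def transform_fields(position, orientation, parent_frame, child_frame):
    """Describe a tf transform as a plain dict (stand-in for TransformStamped)."""
    return {
        "header.frame_id": parent_frame,   # parent frame in the tf tree
        "child_frame_id": child_frame,     # child frame in the tf tree
        "translation": tuple(position),    # (x, y, z)
        "rotation": tuple(orientation),    # quaternion (x, y, z, w)
    }


# Hypothetical robot pose taken from the simulator.
position = (1.5, -0.2, 0.05)
orientation = (0.0, 0.0, 0.0, 1.0)

# Before the change: the pose was broadcast as map --> odom.
before = transform_fields(position, orientation, "map", "odom")

# After the change: the same pose is broadcast as odom --> base_link.
after = transform_fields(position, orientation, "odom", "base_link")

print(before["header.frame_id"], "-->", before["child_frame_id"])
print(after["header.frame_id"], "-->", after["child_frame_id"])
```

Note that the pose data itself is untouched; only the frame names that tell tf where the transform sits in the tree are different.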

The map-->odom transform is now provided by the /slam_gmapping node, which can be confirmed by looking at the tf tree again.
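Why can a SLAM node publish map-->odom at all? Conceptually, it estimates where the robot is in the map frame, reads where odometry says the robot is in the odom frame, and publishes the correction that makes the two agree: map-->odom = (map-->base_link) composed with the inverse of (odom-->base_link). Below is a simplified 2D sketch of that arithmetic with made-up poses; it is my own illustration of the idea and not code taken from slam_gmapping.

```python
import math


def compose(a, b):
    """Chain two 2D transforms given as (x, y, theta): apply b in a's frame."""
    ax, ay, at = a
    bx, by, bt = b
    return (
        ax + math.cos(at) * bx - math.sin(at) * by,
        ay + math.sin(at) * bx + math.cos(at) * by,
        at + bt,
    )


def inverse(a):
    """Invert a 2D transform given as (x, y, theta)."""
    x, y, t = a
    return (
        -math.cos(t) * x - math.sin(t) * y,
        math.sin(t) * x - math.cos(t) * y,
        -t,
    )


# Made-up numbers: where odometry and SLAM each think the robot is.
odom_to_base = (2.0, 0.0, 0.10)   # from the odometry source
map_to_base = (2.1, 0.05, 0.12)   # from the SLAM estimate

# The correction that a SLAM node would publish as map --> odom.
map_to_odom = compose(map_to_base, inverse(odom_to_base))

# Sanity check: chaining map --> odom --> base_link recovers the SLAM estimate.
recovered = compose(map_to_odom, odom_to_base)
print(map_to_odom)
print(recovered)
```

This is also why the odometry node must not publish map-->odom itself: each frame in a tf tree can only have one parent, so the odometry source and the SLAM node need to own different links of the chain.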

Finally, I ran the keyboard_teleop.py script using

$ rosrun racecar_control keyboard_teleop.py

to teleoperate the racecar. Once a good map is obtained, it can be saved using

$ rosrun map_server map_saver -f mymap

With this, we come to the end of this post. The key takeaway is that TF transforms are an extremely important part of ROS, and it is vital that a ROS developer be comfortable with interpreting the TF tree. To any beginner ROS users, I would recommend always running rosrun tf view_frames and taking a look at the TF tree when setting up someone else’s package.

Next, I will be implementing the ROS Navigation Stack [6] on the mit-racecar robot. I will post here if I encounter any interesting problems.